Single page applications can be really powerful. They can be easier to maintain and feel more intuitive than a traditional multi-page website. Search engine crawlers, however, can have trouble crawling them, which is why Google publishes guidelines on how to build single page applications so that search engines can crawl them properly.

Google introduced a new search interface for mobile that uses a single-page application (SPA). This search interface is designed to improve speed and user experience on mobile devices. As a result, Google emphasized the need for mobile SEO professionals to build SEO-friendly URLs for their applications and to make sure those URLs work properly with their apps.

SEO for single page apps

Google has introduced a new feature that allows you to optimize your website for single-page applications. The new feature is called “Single Page App Crawling” and it’s available on the Google Search Console.

This new tool is designed to help you optimize websites for crawlers that can’t navigate through multiple pages. This can be used by SEOs who want to make sure that their site is crawlable by search engines.

The tool was first spotted by Barry Schwartz at Search Engine Land. He also shared an example of how it’s used:

As you can see in this example, when you click on “Site Configuration”, it will bring up a pop-up window that shows different options for crawling your site. You can either allow Googlebot access to all pages or use one of the other options such as “Only allow access to the homepage”. There are also options where you can choose specific URLs that should be crawled or not crawled at all (for example, if there are links or scripts on a page that cause problems).

How to crawl a single page application

The rise of single-page applications (SPAs) has led to some interesting challenges for search engine optimizers (SEOs). SPAs are websites written in JavaScript and built on top of a client-side framework such as Angular or React. They typically render everything on the client side, which means that a crawler that does not execute JavaScript sees little more than an empty HTML shell. This is one reason Google recommends structured data markup (JSON-LD) where it applies to SPAs: it describes the content of a page in a machine-readable form, although the crawler still has to fetch the page to read it.
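
As a rough illustration of the JSON-LD markup mentioned above, the sketch below serializes a schema.org object into a script element that could be placed in the page's HTML. The helper name `json_ld_script` and the article metadata are hypothetical, chosen only for this example.

```python
import json


def json_ld_script(data: dict) -> str:
    """Serialize a schema.org object as a JSON-LD <script> element."""
    payload = json.dumps(data, indent=2)
    # Escape "</" so a value in the payload cannot close the script element early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical article metadata, for illustration only.
article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "How to crawl a single page application",
    "url": "https://example.com/blog/blog-post-1",
}

print(json_ld_script(article))
```

The markup is most useful when it is already present in the HTML the server sends, so that it does not depend on the application's JavaScript having run.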

If you’re building a SPA and want to make sure it’s easily discoverable, here are three things you can do:

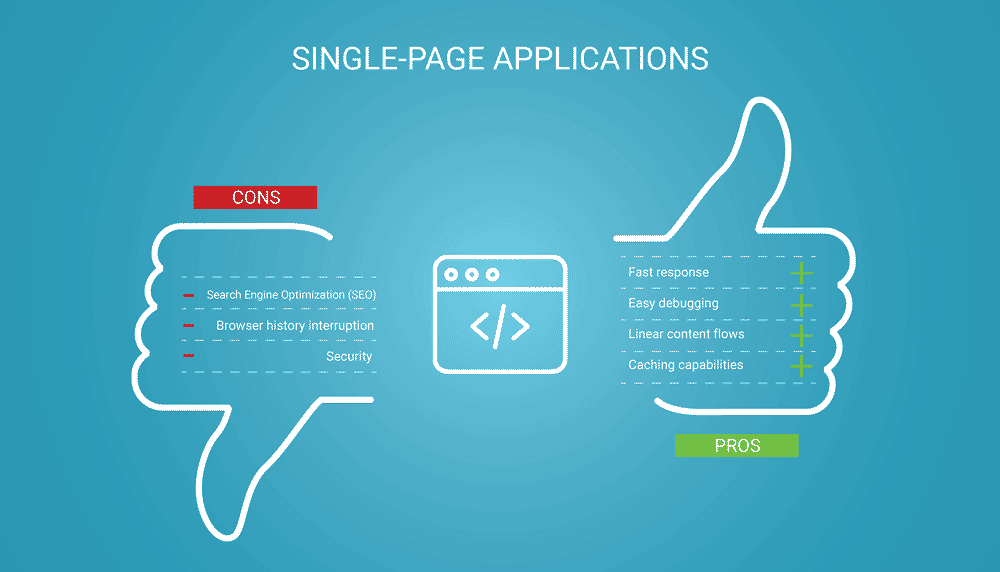

Top SEO Challenges for Single Page Applications

Single-page applications (SPAs) are becoming more and more popular in web development, especially with the rise of single-page e-commerce solutions such as Magento 2. As a result, SEO professionals need to understand how to optimize SPAs and ensure that their clients’ products and services are visible to search engines.

In this post we’ll cover some of the most common SEO challenges that arise from SPAs, including:

How to optimize content for search engines

Google is working on a new crawler dedicated to single page applications. The company is calling it the “Web Framework Fundamentals” team and it’s working to make Google search better at crawling and indexing SPAs (single-page applications).

The team posted a blog post on its progress so far, which you can see below:

Google says that “the current architecture for SPAs does not lend itself well to crawling and indexing.” In other words, SPAs are hard for Google to crawl because they are one big page with no clear way to separate out content.

Google describes its solution as a “new crawler dedicated to SPAs” that will “consume” the application’s DOM directly from the browser in order to understand how it should be indexed. That way, Google can determine what content belongs in its search results and what doesn’t.

Google’s crawler is designed to crawl the web. That is to say, it works best on pages that behave like regular documents rather than single-page apps.

To get a single-page application crawled, we need to make it behave like a normal website. This means making sure that our content is accessible without JavaScript and that its pages and resources (such as images or videos) are reachable through regular links.
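
One way to check whether content is accessible without JavaScript is to look at the text that is present in the raw HTML before any script runs. The sketch below is a simplified approximation of that check; the class and function names are hypothetical, and it only ignores `<script>` and `<style>` content rather than emulating a real crawler.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collect text outside <script> and <style> elements."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # > 0 while inside <script> or <style>
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def text_without_js(html: str) -> str:
    """Return the text a client would see without executing any JavaScript."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# An empty application shell yields no text at all.
shell = '<html><body><div id="root"></div><script>render()</script></body></html>'
print(repr(text_without_js(shell)))
```

If the function returns an empty string for the HTML your server sends, the page content exists only after JavaScript has run.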

How to crawl a single page application?

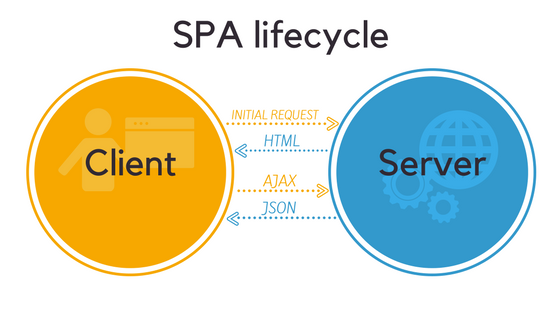

A single-page application (SPA) is a web application that fits on a single web page with the goal of providing a more fluid user experience similar to a desktop application. Single-page applications often use AJAX, but this is not a requirement.

Single-page applications can be difficult for search engines to index because they only have one entry point which can make it hard to identify the main content. If you want your SPA to be crawled correctly, follow these best practices:

Use rel="canonical" and rel="alternate" tags

If your SPA has multiple URLs, add a rel="canonical" tag to each page with the canonical URL as its value. This tells search engines which URL to treat as the preferred version when several URLs serve the same content.
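
A minimal sketch of generating such a tag is shown below. The normalization rule used here (dropping the query string and fragment, lowercasing the scheme and host) is an assumption made for the example; a site whose query parameters select different content would need a different rule. Both function names are hypothetical.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit


def canonical_url(url: str) -> str:
    """Assumed rule: drop query string and fragment, lowercase scheme and host."""
    parts = urlsplit(url)
    path = parts.path or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, "", ""))


def canonical_tag(url: str) -> str:
    """Build the <link rel="canonical"> element for the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url), quote=True)}">'


print(canonical_tag("https://Example.com/blog/blog-post-1?utm_source=newsletter#comments"))
```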

Use hreflang tags

If you have multiple languages or country versions of your site, use hreflang tags in order for search engines to show the correct version of your website in different language markets.
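
The sketch below shows one way the hreflang tags for a page could be generated from a mapping of language codes to URLs. The function name, the example URLs, and the choice to emit an `x-default` entry are assumptions for illustration.

```python
from html import escape


def hreflang_tags(versions: dict, default_lang: str) -> str:
    """Build one <link rel="alternate"> per language version, plus x-default."""
    lines = [
        f'<link rel="alternate" hreflang="{escape(lang)}" href="{escape(url)}">'
        for lang, url in sorted(versions.items())
    ]
    # x-default marks the fallback for users whose language is not listed.
    fallback = escape(versions[default_lang])
    lines.append(f'<link rel="alternate" hreflang="x-default" href="{fallback}">')
    return "\n".join(lines)


# Hypothetical language versions of the same page.
print(hreflang_tags(
    {"en": "https://example.com/en/", "de": "https://example.com/de/"},
    default_lang="en",
))
```

Each language version of the page should carry the same full set of tags, including one that points to itself.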

Google is the most popular search engine in the world. In this article we will discuss how to crawl a Single Page Application (SPA).

Sites built as single page applications have many URLs but only one HTML document, and the content for each URL is loaded dynamically as the user navigates between pages. This means that crawlers are unable to see the content until the JavaScript has loaded and rendered it, which may take several seconds on slow networks.

To make your SPA discoverable by crawlers and indexable by Googlebot, you can serve a version of your site that allows crawlers to see all of its content immediately. You can do this using a technique called server-side rendering (SSR), also known as universal rendering.
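
The idea behind server-side rendering can be sketched with a small WSGI application that returns complete HTML for each route, so the content is present before any JavaScript runs. This is a simplified illustration, not a production setup: the `POSTS` store, the routes, and the `/app.js` bundle path are all hypothetical.

```python
from html import escape

# Hypothetical content store; a real app would query its API or database.
POSTS = {
    "/blog/blog-post-1": {"title": "Blog post 1", "body": "First post."},
}


def render_page(path: str):
    """Return (status, html) with the page content already in the markup."""
    post = POSTS.get(path)
    if post is None:
        return "404 Not Found", "<!doctype html><title>Not found</title><h1>Not found</h1>"
    html = (
        "<!doctype html>"
        f"<title>{escape(post['title'])}</title>"
        '<div id="root">'
        f"<h1>{escape(post['title'])}</h1><p>{escape(post['body'])}</p>"
        "</div>"
        # The client-side bundle can still take over after the first paint.
        '<script src="/app.js"></script>'
    )
    return "200 OK", html


def app(environ, start_response):
    """WSGI entry point: render the requested route on the server."""
    status, html = render_page(environ.get("PATH_INFO", "/"))
    body = html.encode("utf-8")
    start_response(status, [
        ("Content-Type", "text/html; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ])
    return [body]
```

To try it locally, the app can be served with the standard library, for example `wsgiref.simple_server.make_server("", 8000, app).serve_forever()`. Returning a real 404 status for unknown routes also helps crawlers avoid indexing error pages.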

Crawling a single-page application (SPA) is a bit more complicated than crawling a traditional website.

SPAs are applications that load all their content dynamically, on demand. This means that the page you see in your browser is not the same as what Google sees. Instead, Googlebot needs to execute JavaScript on your page to understand and interpret its content.

The good news is that Google has been working hard to improve how it crawls SPAs. In this article, we’ll show you how to optimize your SPA for Googlebot and help it deliver the best experience for users and search engines alike.

If you want to crawl a single-page application (SPA), you will need to take extra steps.

The first step is to identify how the pages are loaded, and then use that information to create a site map. The second step is to create an indexable version of the HTML that can be crawled by search engines.

When crawling a SPA, you should still try to identify the individual pages on the site. Some SPAs load content dynamically, which means there’s no need for separate pages. However, many SPAs load content based on user behavior or selected filters. In this case, you’ll need to inspect the source code of each page so that you can identify any URLs that might be useful when building your site map and indexing strategies.
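
Once the individual routes have been identified, turning them into a site map is mostly mechanical. The sketch below builds a sitemap in the sitemaps.org XML format from a list of routes; the function name and the example routes are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(base_url: str, routes) -> str:
    """Build a sitemap XML document with one <url> entry per unique route."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for route in sorted(set(routes)):
        url = ET.SubElement(urlset, "url")
        loc = ET.SubElement(url, "loc")
        loc.text = base_url.rstrip("/") + "/" + route.lstrip("/")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


# Hypothetical routes discovered in the application.
print(build_sitemap("https://example.com", ["/", "/blog/blog-post-1"]))
```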

A single page application is a website that uses JavaScript to load pages dynamically rather than serving static HTML for each page. It is written in JavaScript and uses AJAX calls to retrieve data from the server.

The main benefit of single page applications is faster navigation and a better user experience. This is because the browser loads the application once and then fetches only the data each view needs, instead of downloading a full page on every navigation.

As you may have noticed, Google can’t crawl a single page application very well, so there are some things we need to do:

- Make sure your website has a sitemap with all links pointing to static HTML pages (for example "/blog/blog-post-1").
- Add a rel="canonical" tag to every page that is also reachable through a dynamic URL (for example `<link rel="canonical" href="http://example.com/blog/blog-post-1">`).
- Add HSTS headers (HTTP Strict Transport Security) so that the site is always served over HTTPS.
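
For the HSTS item, the header itself is a single name and value pair added to every HTTPS response. The sketch below builds it; the one-year default and the function name are assumptions for this example, and how the header is attached depends on your server or framework.

```python
def hsts_header(max_age_days: int = 365, include_subdomains: bool = True):
    """Return the (name, value) pair for a Strict-Transport-Security header."""
    value = f"max-age={max_age_days * 24 * 60 * 60}"  # max-age is given in seconds
    if include_subdomains:
        value += "; includeSubDomains"
    return ("Strict-Transport-Security", value)


print(hsts_header())
```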